Harvard released a study on ChatGPT — here are my tips you can use today

1.1 million ChatGPT chats reveal the 3 ways most people actually use AI (and the trick you’re missing)

OpenAI, Duke & Harvard University released a paper this week called:

“How People Use ChatGPT” and you can find it here. I love this paper and David Ogilvy would approve of the brilliant title.

A quick note

We are going to run through this paper and then at the end of the article, I’ve got two tips for you to use this week that should help you with 80% of your queries.

AI is growing much like the early internet

Jeff Bezos quit his job at the hedge fund to start Amazon and said this:

"I saw the internet was growing at 2300% a year and I realized nothing outside of a petri dish grows that fast"

Jeff Bezos

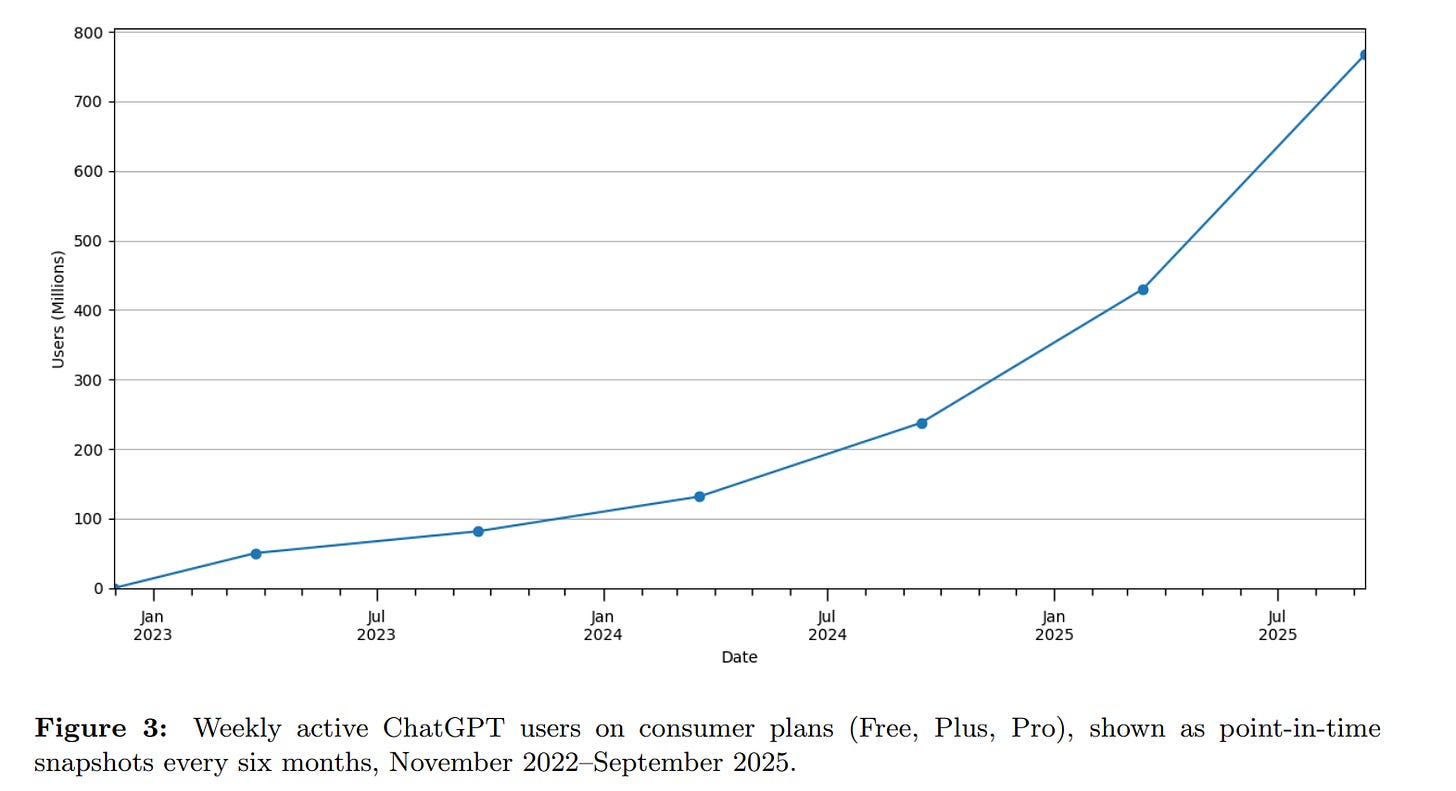

ChatGPT is used by 10% of the entire world’s adult population every single week with close to 800 million weekly active users.

Look at this usage growth chart. I’d describe it as “hedge fund quittable”. So if you are currently reading this and you are working for a hedge fund, then please quit now.

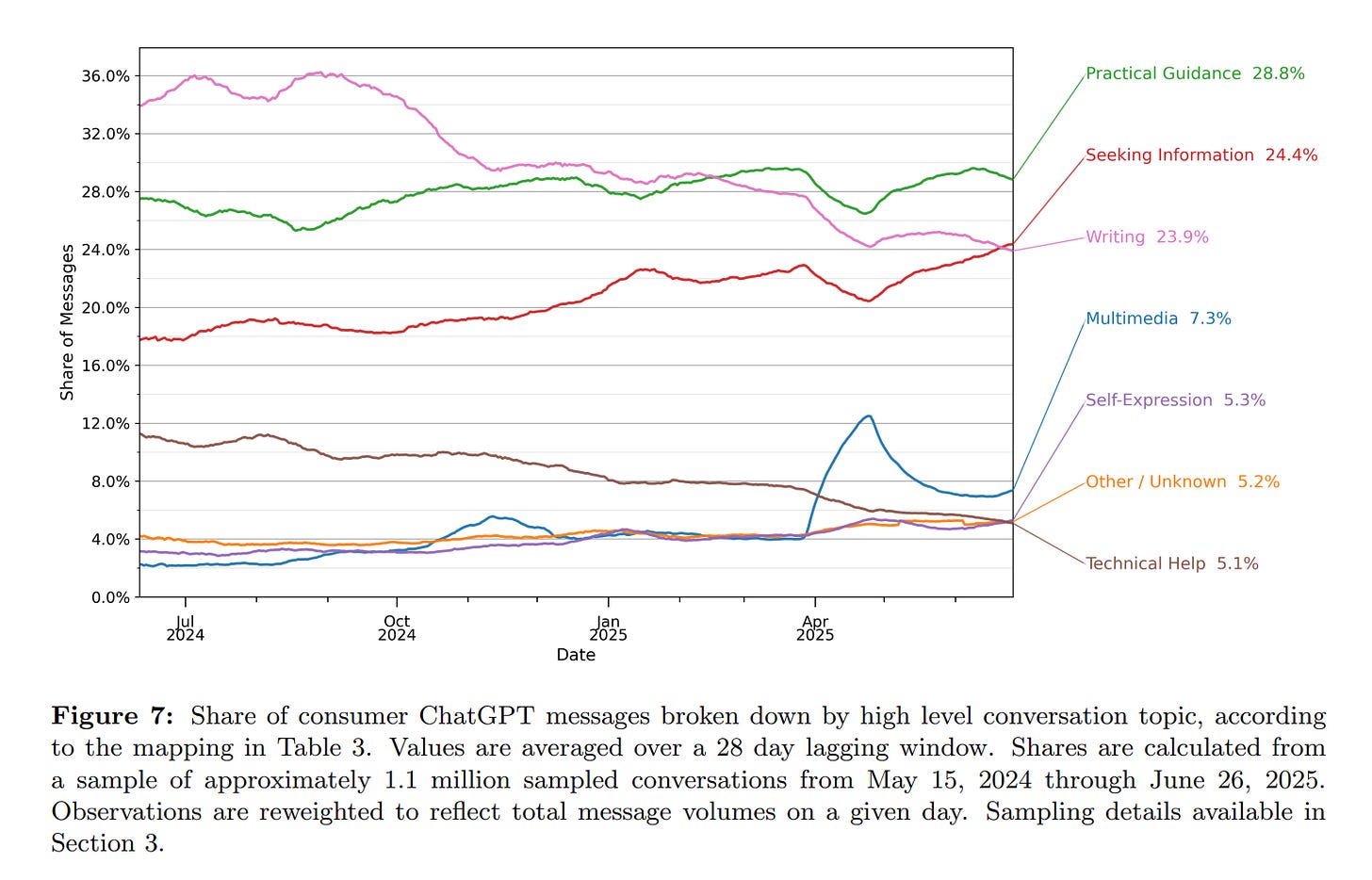

Now that’s what we call a sample size

Everything’s bigger in Texas as they say and everything’s bigger when it comes to AI.

This study had just a tiny sample of 1.1 million conversations from 130,000 users. This is so much better than some of the athletics studies I read where the sample size is like Peter from accounts and 3 random people.

Surely this sample was so big that it’s impossible to analyse?

Wait—what’s that? Do you hear it?

Cue the intro music

Well well well, if it isn’t our old friend, the 80/20 rule.

The entirety of human conversation—nuance, jokes, questions, arguments, advice—fed into the machine. No way the 80/20 rule survives this, right?

*BODY SLAM*

And there it is: 1.1 million ChatGPT chats smashed into submission. The 80/20 rule climbs out of the wreckage holding the belt. Of course it does. Of course the entirety of human consciousness is an 80/20 rule. I mean, why would it not be?

Close to 80% of conversations fall into just three categories:

Practical Guidance (28.8%)

Seeking information (24.4%)

Writing (23.9%)
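As a quick sanity check, here is a minimal sketch that adds up the three shares listed above. The dictionary name and labels are just mine for illustration; the percentages are the ones quoted in this article.

```python
# Shares of conversations for the top three categories, as quoted above.
top_three_shares = {
    "Practical guidance": 28.8,
    "Seeking information": 24.4,
    "Writing": 23.9,
}

# Sum the shares and round to one decimal place to avoid float noise.
combined_share = round(sum(top_three_shares.values()), 1)

print(f"Top three categories combined: {combined_share}%")
```

So the "80" in 80/20 is a friendly rounding of roughly 77%.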

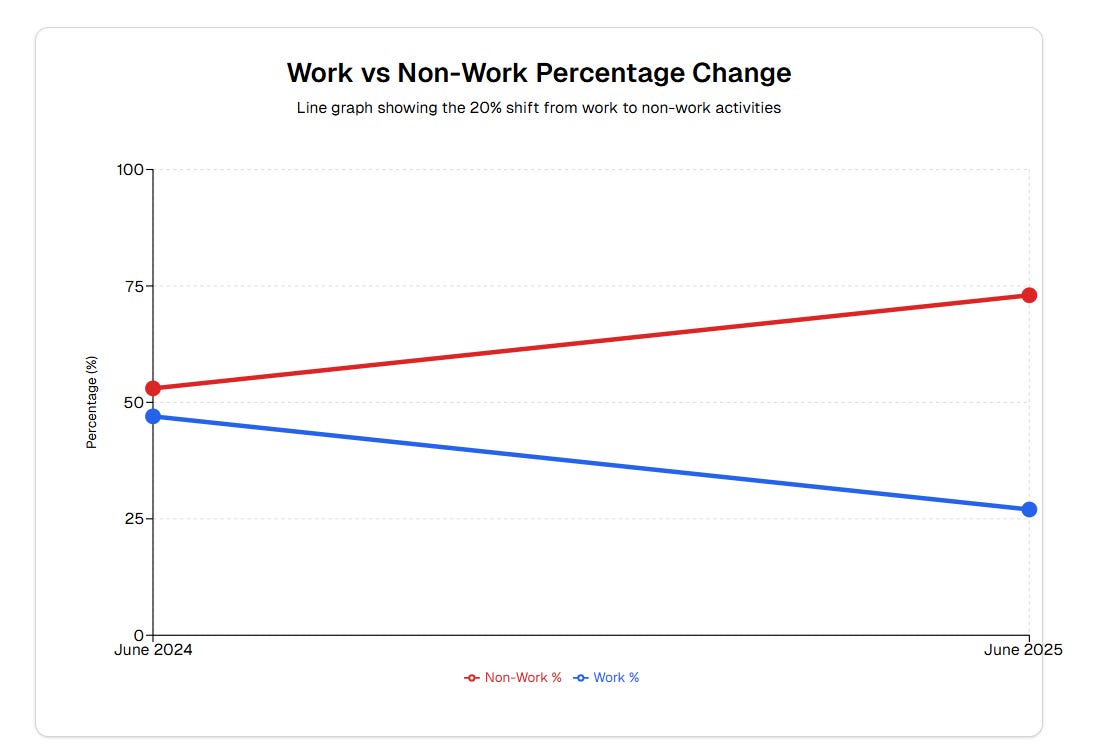

Comparing work usage vs non-work usage

This part of the paper surprised me.

Look at the graph below. The trend is quite amazing: non-work use has been increasing steadily over the past year.

June 2024: 53% non-work, 47% work

June 2025: 73% non-work, 27% work

This is a massive change in just one year - non-work usage grew much faster than work usage.

Key insights about this split

1. It's driven by existing users, not new ones

The paper specifically notes this shift is "primarily due to changing usage within each cohort of users rather than a change in the composition of new ChatGPT users."

This means people who initially used ChatGPT for work are increasingly using it for personal reasons - they're finding more value in non-work applications.

I find this fascinating as this shows that AI is one of the mega technologies. It’s the speed of transition that really stands out.

ChatGPT: 53% → 73% non-work in just one year

Google took 5-7 years to become mainstream

The internet took decades

2. Both are growing, but non-work is accelerating

Work usage is still growing in absolute terms

But non-work usage is growing much faster

Total daily messages went from 451M to 2.6B (5.8x growth)
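Here is a back-of-the-envelope sketch of why both statements can be true at once. It assumes the June 2024 and June 2025 work/non-work shares quoted earlier apply to the 451M and 2.6B daily message totals; that pairing and the variable names are my assumptions, not something spelled out above.

```python
# Daily message totals quoted above (assumed to match the June 2024 / June 2025 shares).
total_2024 = 451e6
total_2025 = 2.6e9

# Work / non-work shares quoted earlier in the article.
work_share_2024, nonwork_share_2024 = 0.47, 0.53
work_share_2025, nonwork_share_2025 = 0.27, 0.73

# Growth multiples: messages in 2025 divided by messages in 2024.
total_growth = total_2025 / total_2024
work_growth = (work_share_2025 * total_2025) / (work_share_2024 * total_2024)
nonwork_growth = (nonwork_share_2025 * total_2025) / (nonwork_share_2024 * total_2024)

print(f"Total:    {total_growth:.1f}x")
print(f"Work:     {work_growth:.1f}x")
print(f"Non-work: {nonwork_growth:.1f}x")
```

Under those assumptions, work messages still roughly triple even though their share falls, while non-work messages grow close to eightfold.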

One of the quotes I found particularly interesting:

Overall, our findings suggest that ChatGPT has a broad-based impact on the global economy. The fact that non-work usage is increasing faster suggests that the welfare gains from generative AI usage could be substantial. Collis and Brynjolfsson (2025) estimate that US users would have to be paid $98 to forgo using generative AI for a month, implying a surplus of at least $97 billion a year.

This is Harvard and Duke University, remember, not a hype article. Those are mind-boggling numbers for such a new technology.

Put another way, US users would each need to be paid about $98 to forgo generative AI for a single month.

Another key thing that stood out is that there was a remarkable similarity across occupations in how ChatGPT is used for work.

As a consultant, I can 100% confirm that this matches my experience. I think that should really encourage you: we as humans all struggle with similar things, whatever industry we are in. It also means that I’d be upping my AI training budget for 2026, as pretty much any course is going to benefit your staff in both their personal and professional lives.

What this means

1. AI is becoming a life tool, not just a work tool

I think everyone in a management position should take note of this. Basically, every staff member is going home and using this tool every single day. It means that most of your staff members are probably far more skilled at using AI than they are letting on.

This is an incredibly powerful force that will continue to compound over time.

Not only that but 46% of all usage is from people under 26 years of age. I believe strongly that your value with AI is directly tied to the number of reps that you put in, so I’d like to see more young people on these AI boards and governance groups.

2. The value may be underestimated

If most economic analysis focuses on workplace productivity, but 73% of usage is non-work, we might be dramatically underestimating AI's total economic impact.

3. You start using it to write your emails, then it becomes your best friend at home

People start with work applications (more obvious use cases) but over time discover personal applications that may be even more valuable to them.

I want to help you with AI now

Now that I can see you are all seeking information and need help with writing, I want to give you two tips to help you use AI better for those 80/20 tasks.

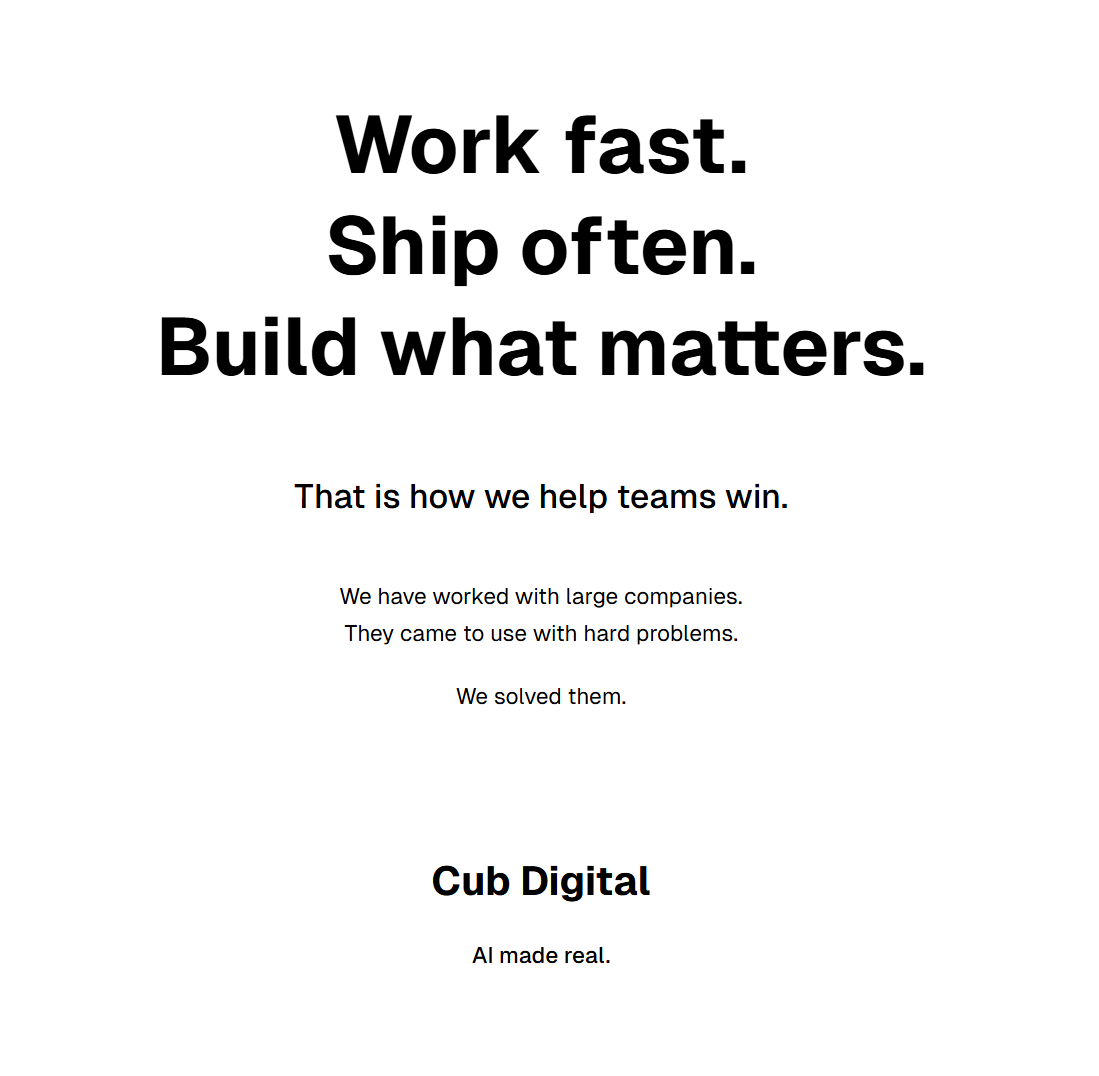

Better living everyone tip #1 - Stand on the shoulders of giants

I like Ernest Hemingway and some of his quotes are the best.

“You know what makes a good loser? Practice.”

Ernest Hemingway

Instead of telling AI what to do and how to write, e.g.

“Write in a style that’s clear, short, with no emojis….”

I get it to take the equivalent of a pre-workout at the gym.

Step 1 - start with a prompt like this

Don’t start with what you are writing. First get ChatGPT to list the tips for writing in the style you like.

It will reply with a bunch of cool tips.

Step 2 - Ask it to write in that style for whatever you are doing

Now that you have primed the context with Hemingway’s style, you then get the AI to write your copy. I find this works better than saying “Write a document in the style of X”, because with that prompt the AI focuses more on the document than on the style.

I asked it to write some copy about Cub Digital in the style of Hemingway. Here’s what it made.

As you can see, it’s a great way to get effective copy (as opposed to telling AI what not to do, e.g. no emojis).

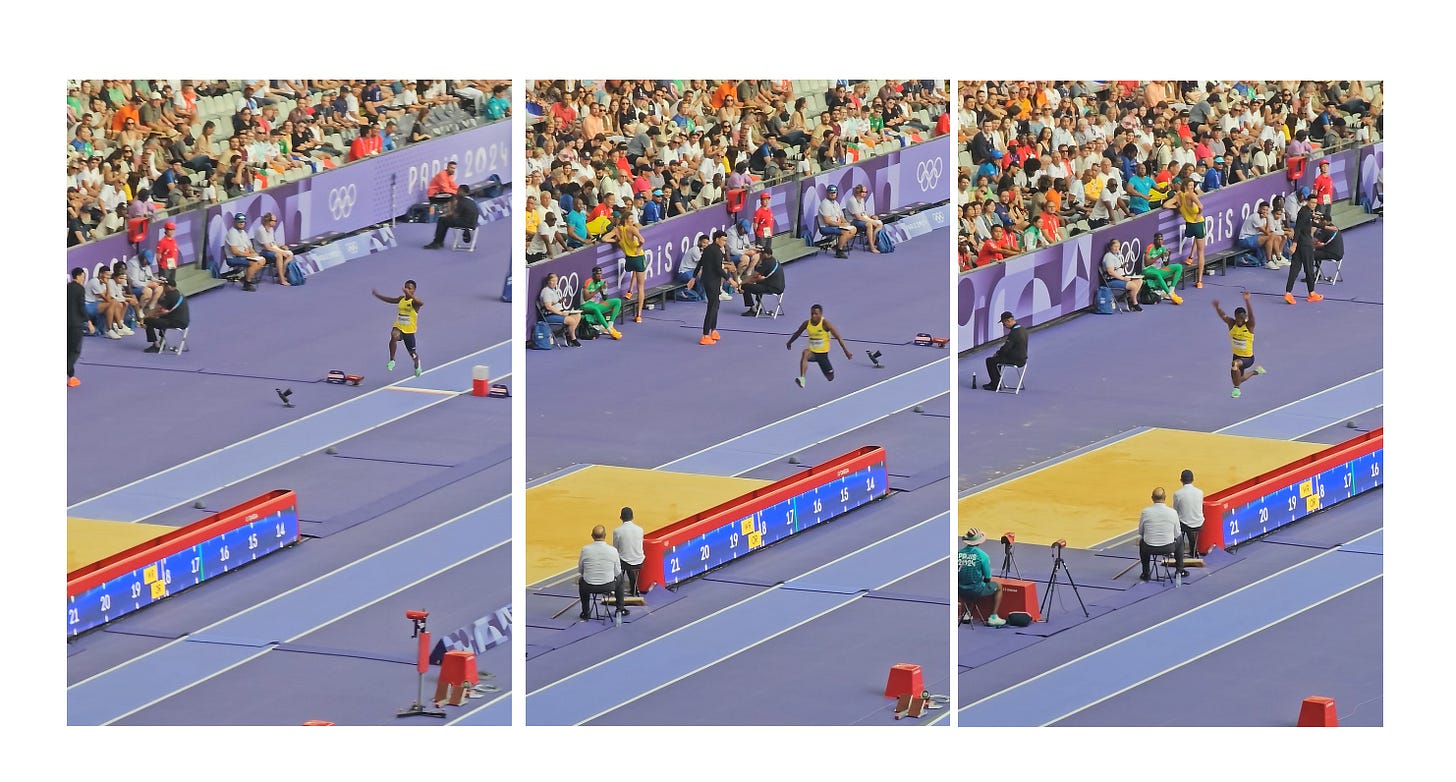

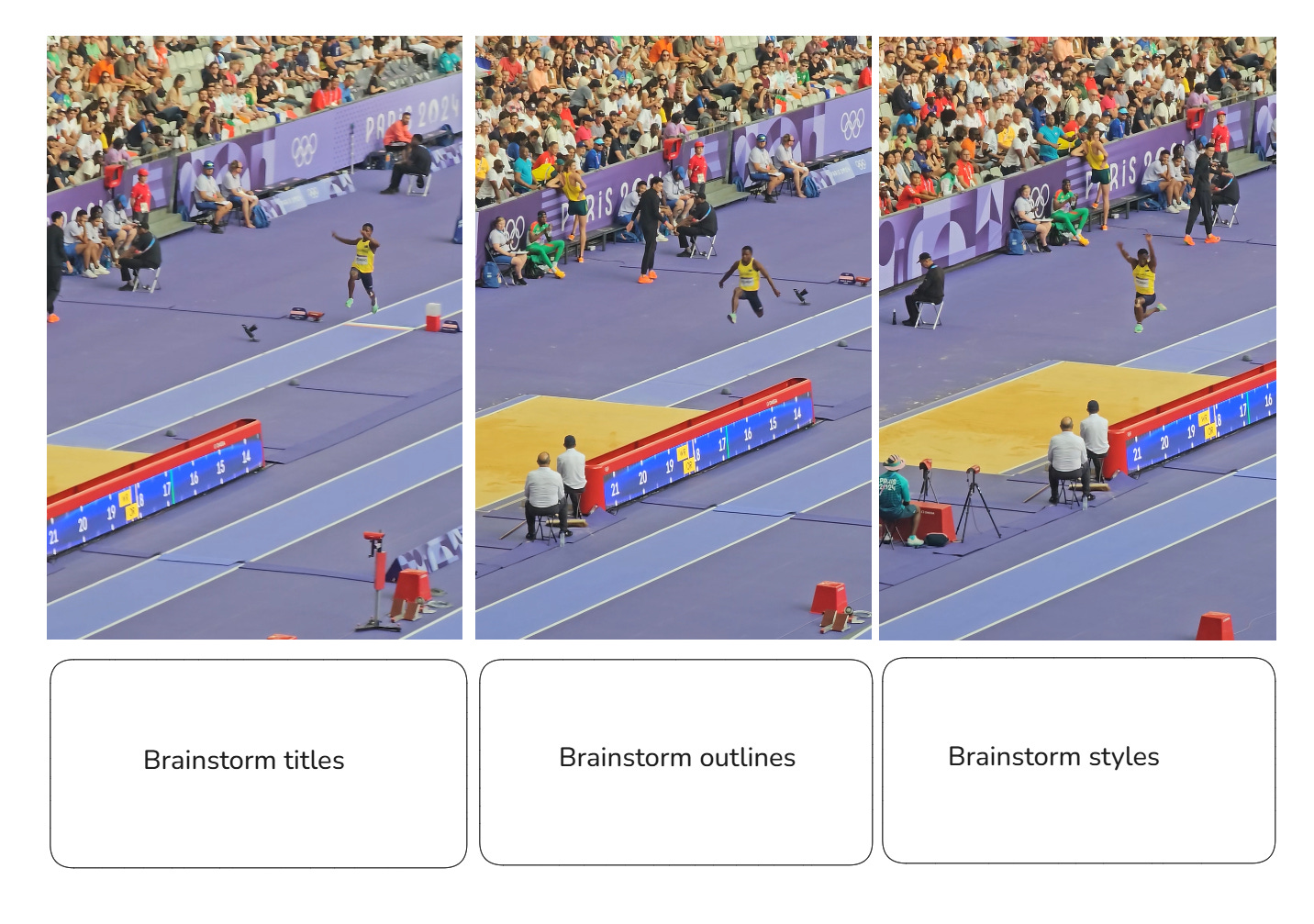
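If you ever want to script the two steps above rather than type them into the chat window, a minimal sketch could look like this. Everything here is an assumption for illustration: `ask` is a hypothetical function you supply that sends a list of chat messages to whatever model you use and returns its reply as text, and `primed_write` is just a name I made up.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def primed_write(ask: Callable[[List[Message]], str], author: str, task: str) -> str:
    """Two-step prompt: first ask for style tips, then ask for the copy.

    `ask` is a hypothetical, user-supplied function that takes the chat
    history so far and returns the model's reply as a string.
    """
    # Step 1: get the model to list the writing tips for the chosen style.
    history: List[Message] = [
        {"role": "user", "content": f"List the key tips for writing in the style of {author}."}
    ]
    tips = ask(history)

    # Keep the tips in the conversation so they prime the next request.
    history.append({"role": "assistant", "content": tips})

    # Step 2: ask for the actual copy, written using those tips.
    history.append({"role": "user", "content": f"Using those tips, write {task}."})
    return ask(history)
```

You would call it with something like `primed_write(ask, "Ernest Hemingway", "some copy about Cub Digital")`. The point of the design is that the style tips sit in the conversation before the writing request arrives.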

Here’s another trick - think triple jump not long jump

I got to go to the Olympics last year and watch the triple jump.

I like to think of this analogy when using AI.

Rather than saying “Write this piece of content for me” (the equivalent of doing the long jump), break things down into stages.

So in this case, if we want to write something:

Step 1 is to brainstorm 10 different titles

Step 2 is to select the best 3 of those titles and then generate 3 different outlines for each

Step 3 is to brainstorm different writing styles it could be done in.

This technique can be used in all sorts of areas

When you are making a presentation

When you are trying to understand a concept

When you are asking for advice

Basically, by breaking the task down into smaller chunks you are going to propel yourself further forward, as each stage brings more intelligence and more surface area to your query.
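For anyone who prefers to automate the hop, step and jump, here is a rough sketch of the three writing stages described above as a chained conversation. As before, `ask` is a hypothetical function you provide that sends the chat history to your model of choice and returns the reply; `triple_jump` and the exact prompt wording are my own illustration.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def triple_jump(ask: Callable[[List[Message]], str], topic: str) -> List[str]:
    """Run the three stages in order, keeping every reply in the history.

    `ask` is a hypothetical, user-supplied function that takes the chat
    history so far and returns the model's reply as a string.
    """
    stages = [
        f"Brainstorm 10 different titles for a piece about {topic}.",
        "Select the best 3 of those titles and generate 3 different outlines for each.",
        "Brainstorm different writing styles this piece could be written in.",
    ]

    history: List[Message] = []
    replies: List[str] = []
    for prompt in stages:
        # Each stage sees everything produced by the stages before it.
        history.append({"role": "user", "content": prompt})
        reply = ask(history)
        history.append({"role": "assistant", "content": reply})
        replies.append(reply)
    return replies
```

Because each stage is appended to the same history, the later prompts can say "those titles" and the model has the earlier answers to work from.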

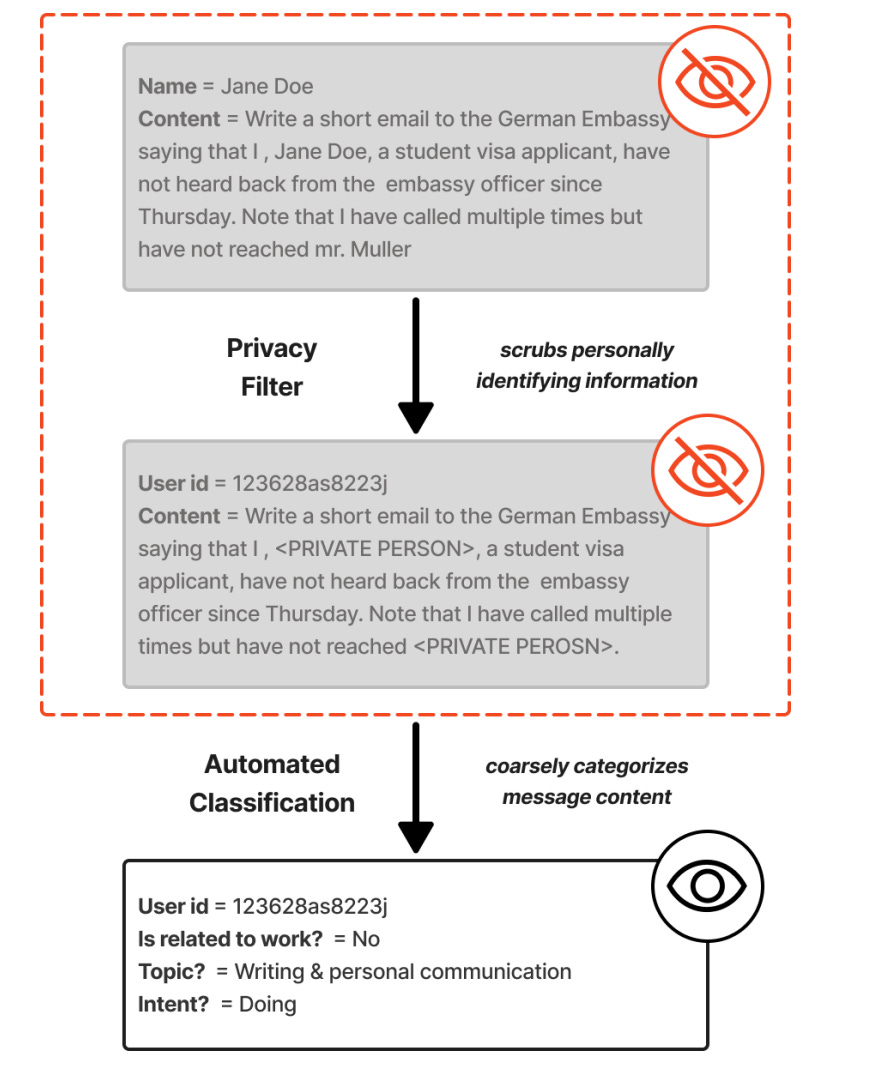

Hey security lovers - frame this image please

A large number of people wonder how security works with AI. I think this graphic is a really good illustration of how the researchers cleansed the data before it was analysed.

I want to print out this graphic and send it to every boardroom in the world. It just shows you how you can actually make sensitive data safe to analyse by removing information.

Now it’s time for book club

Thinking in Bets

Thinking in Bets by Annie Duke reframes decision-making as a game of probabilities rather than certainties.

I liked it because she is a poker player and she has this great question:

What’s the best decision that you made last year and what’s the worst decision?

Long story short, most people will answer that question with the outcome of the decision and not the decision itself.

For example:

Hiring: You hired a candidate after a thorough process (references, interviews, skills test). Six months later, their partner got a job overseas and they resigned.

Was hiring them a good decision?

Yes, it was. However, studies show that most people will say no because of how it worked out.

Read the book to see why this is a majorly flawed way to view the world.

You can get the book here. Thanks to Robbie for the recommendation.

Paid Subscribers

As a thank you, all paid subscribers can book a consulting call with me. It’s a chance to dig into your project, your questions, or your ideas—and right now, it’s priced well below my normal hourly rate.

If you haven’t taken me up on this yet, just email me at chris@cubdigital.co.nz to set up a time. These conversations have been a real highlight for me—I’ve loved hearing about what you’re building, and often I leave with as many insights as you do.

Want more AI tips?

Other articles I wrote this week

Why Every ‘I Built an App with AI in One Day’ Post is Lying to You

Stop styling your websites — Claude Code is 3x faster without it

How Warp.Dev made my project setup 10x faster (no exaggeration)

50% of you think Claude is magic & 50% think it’s garbage — Who’s right?

Note: I’m an Amazon affiliate. If you purchase a book, then I make a small commission on the purchase. It helps to support my ridiculously expensive reading habit and is much appreciated. Thanks to the 15 people that bought books last week!

Thanks Chris - makes me think, as always. Undoubtedly, the use of AI across the global population is growing at a ridiculously fast rate, so I'm not surprised by those numbers.

Also, I'm not really surprised that the use of consumer ChatGPT at home is growing way faster than at work. My understanding from the article is that it's not the overall use of AI tools in the workplace that is being outstripped by home usage, it's specifically the use of consumer ChatGPT. A few reasons for this I see are:

- Better understanding of the need for good Governance around the use of AI in the workplace. This is being enacted by the implementation of effective policies and frameworks that promote the use of AI tools, but give businesses necessary control. I don't have any data to back up my claim but my guess is that this is starting to slow down the adoption of Shadow AI (and consumer ChatGPT should definitely be classed as Shadow AI in my book).

- Linked to the above, a more open conversation in the workplace around the use of AI (including commercial risks), driven by targeted security awareness training (amongst other things), leading to a better understanding of the need to protect IP (and not just pump it into the internet) throughout the workforce

- Better adoption of other business specific AI tools (not consumer ChatGPT!) to achieve better defined use cases.

So whilst this paper by Harvard and Duke is not a hype article, and delivers some fascinating results, it's highly skewed towards promoting ChatGPT, which is probably not surprising as 6 of the authors have direct links to OpenAI (but very interesting nonetheless!).